Introduction to Kubernetes

Introduction

Kubernetes is a container orchestrator designed for applications that run in containers and need the following:

- Service Discovery and Load Balancing

- Automatic Bin Packing

- Storage Orchestration

- Self Healing

- Secret and Configuration Management

- Automated Rollouts and Rollbacks

- Batch Execution

- Horizontal Scaling

Kubernetes is used in production environments to run applications that should be available at all times and that have many variables to monitor. Also known as K8s, it is a big tool with many fancy knobs and buttons; this article tries to explain and demystify some of the basics of what Kubernetes is.

Why you should use it

Deploying applications to production raises a number of recurring problems.

Before Kubernetes and other container orchestrators, one of those problems was managing resources: we had to spin up a server for every new application we wanted to deploy.

A server, in principle, should hold only one application, including all of its dependencies, which had to be manually deployed via some CI process every time we wanted to use it. This process was stressful and required many technical skills to manage networking, security, and storage, and all that effort would go to waste if the server became unresponsive or stopped working.

Why Containers

Containers provide a good level of isolation between the application and the machine running it. If the container running the application goes down, it doesn't affect the machine. Because of this, we can spin up several containers on a single machine without worrying about conflicts.

Kubernetes provides this container management feature and the ability to run multiple servers in a cluster setup. Kubernetes can control these multiple machines by running a Control Plane, which defines how the master and slaves communicate with each other.

The various parts of the Kubernetes Control Plane, such as the Kubernetes Master and kubelet processes, govern how Kubernetes communicates with your cluster.

The Control Plane maintains a record of all of the Kubernetes Objects in the system and runs continuous control loops to manage those objects’ states.

At any given time, the Control Plane’s control loops will respond to changes in the cluster and work to make the actual state of all the objects in the system match the desired state that you provided.

Source: https://kubernetes.io/docs/concepts/#kubernetes-objects
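The idea of a control loop that drives actual state toward desired state can be illustrated with a small sketch. This is not how Kubernetes itself is implemented; the `reconcile` function and the pod names are hypothetical, and the "cluster" is just a list of strings.

```python
# Minimal sketch of one pass of a control loop: compare the desired state
# with the actual state and act on the difference.
# Everything here is hypothetical and only simulates the idea.

def reconcile(desired_replicas: int, running_pods: list[str]) -> list[str]:
    """Return the list of running pods after one reconciliation pass."""
    pods = list(running_pods)
    next_id = 0
    # Too few pods: start replacements until the desired count is reached.
    while len(pods) < desired_replicas:
        name = f"pod-{next_id}"
        if name not in pods:
            pods.append(name)
        next_id += 1
    # Too many pods: keep only the desired number.
    return pods[:desired_replicas]


actual = ["pod-0", "pod-2"]    # pod-1 has crashed
actual = reconcile(3, actual)  # desired state: 3 replicas
print(actual)
```

A real controller runs a pass like this continuously, reacting to every change it observes rather than being called once.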

Kubernetes Architecture

Because Kubernetes is built for handling distributed workloads, a cluster is made up of two types of nodes (the official documentation now calls them the control plane and worker nodes):

- Master

- Slaves

Master

This is what we use to talk to the slaves. In Kubernetes, the master is where we declare which deployments should be on our cluster and how they should be managed. The master delegates work to the slaves, which are responsible for starting the containers and ensuring they function as desired.

The master does this using a collection of three processes:

- API server (kube-apiserver)

- Controller manager (kube-controller-manager)

- Scheduler (kube-scheduler)

The API server is the entry point we use from a client; it is how the control plane is exposed to the outside world. Through it, we can perform operations on the cluster using a command-line client called kubectl.

The Controller manager runs the controllers that watch over what we submitted to the cluster through the API server. It makes sure, for example, that a deployment keeps running and that the cluster and the workloads on it stay healthy. It does this by continuously checking the different resource objects in Kubernetes and acting when they drift from the expected state.

The Scheduler decides where workloads run. Because we run many applications in different containers, we do not want one of them to take over all the resources, such as RAM, CPU, and storage, and starve the others. The Scheduler therefore checks that any application placed on a slave fits within that node's available resources and, if it does not, schedules it onto another node, so that the workload is shared fairly across all of them.

Slaves

These nodes do the grunt work of running services and everything the applications need. The Scheduler assigns work to them, the controller manager monitors them, and we interact with them through the API server on the master. In general, each slave runs two things:

- Kubelet

- Kube-Proxy

Kubelet is the agent responsible for talking with the master node. It receives instructions about which containers the node should run, starts them, and reports their status back so that the master can monitor them.

Kube-proxy handles networking for the applications on the node. Just as Kubelet communicates with the master, kube-proxy deals with traffic to the applications: it routes requests addressed to a Service to the ports of the containers running behind it.

Containers in Kubernetes

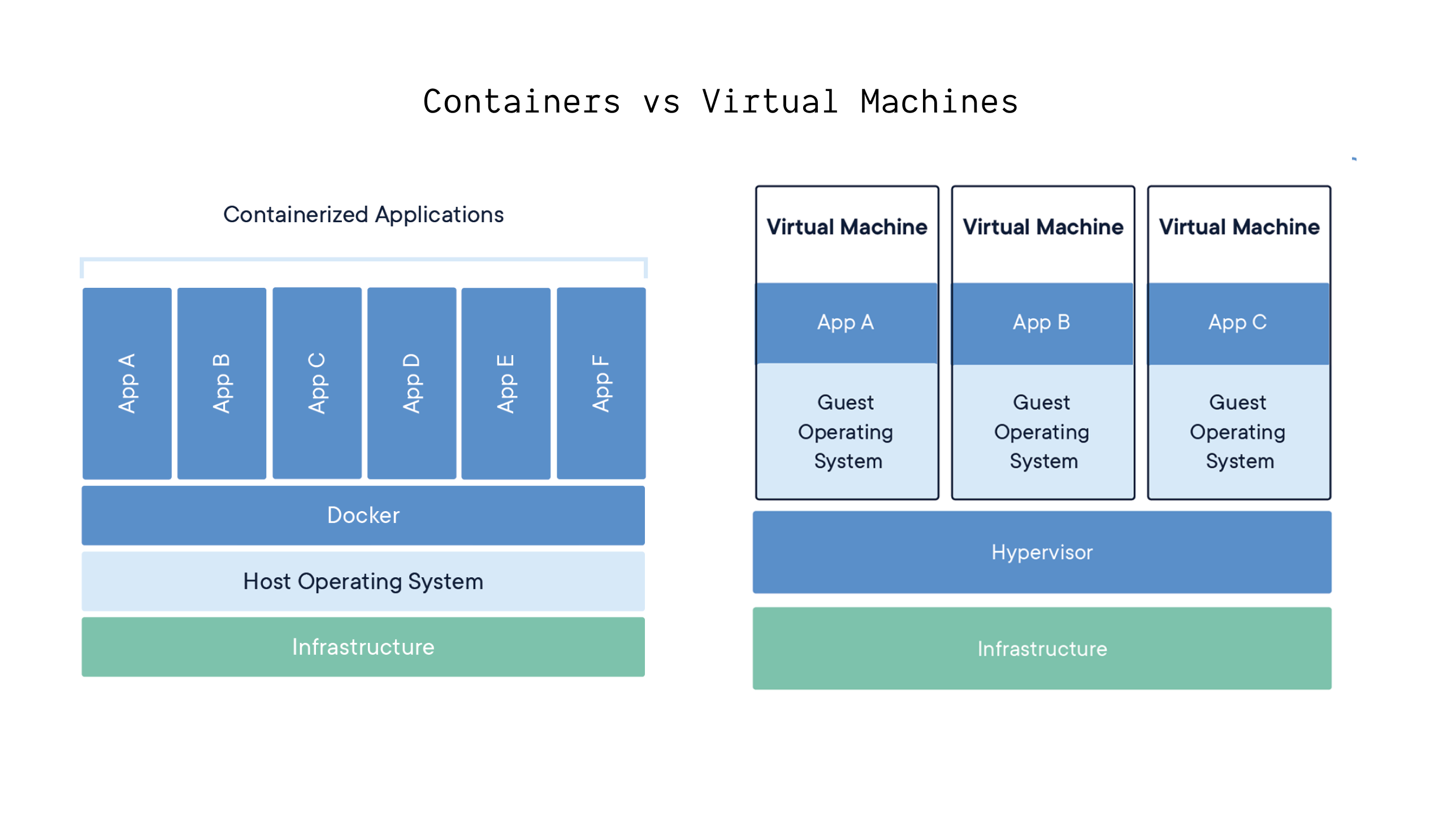

Containers running on bare metal vs VMs

We use containers because they allow us to run multiple applications on a single host machine. Containers run in sandboxes, which makes them more secure: only the host can see them, and one container cannot see or interfere with another. This isolation would not be possible if we ran all our applications directly on a single server.

Deployments and Containers

In Kubernetes, because we have different things like Networking, Storage, RAM, and CPU to manage amongst various applications that might or might not be similar, we interact with these various resources using Kubernetes Objects.

Kubernetes contains several abstractions that represent the state of your system: deployed containerized applications and workloads, their associated network and disk resources, and other information about what your cluster is doing.

These abstractions are represented by objects in the Kubernetes API.

Source: https://kubernetes.io/docs/concepts/#kubernetes-objects

The basic Kubernetes objects include:

- Pod - Running Application

- Service - Networking and Port Allocation

- Volume - Storage and Disk Management

- Namespace - Sandbox For Running Multiple Versions

Also, Kubernetes contains several higher-level abstractions called Controllers. Controllers build upon the basic objects and provide additional functionality and convenience features. They include:

- ReplicaSet - Similar Pods Running Together

- Deployment - A higher-level group that manages ReplicaSets and rolls out updates to their Pods

- StatefulSet - An application that has Volume requirements and should not start unless it is offered a separate and persistent space to store files

- DaemonSet - Just like a ReplicaSet, but this one should be present on all nodes running in the cluster

- Job - A short-running Pod, good for things like webhooks and email dispatchers

These and many more are the different types of Kubernetes objects. Filtering through all the big talk, we will only focus on deployments.

A deployment manages one or more pods created from the same template, so they are replicas of one another. A pod is the smallest unit Kubernetes runs: one or more containers that have been allocated resources together. Running a pod on its own is rarely a good idea, as it has limited functionality and is not replaced once it dies or its node fails.

Deployments and other higher-order groups built on Pods let us specify how many pods to run and keep that number going, so that our application stays up even when a pod fails.
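As a rough illustration, a deployment that keeps three pods running can be described with a manifest like the one built below. The field names follow the `apps/v1` Deployment API; the name `web`, the label `app: web`, and the `nginx:1.25` image are placeholders chosen for this sketch.

```python
import json

# A Deployment manifest expressed as a Python dictionary.
# The names, labels, and image are hypothetical examples.
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 3,  # how many pods to keep running
        # The selector tells the deployment which pods belong to it.
        "selector": {"matchLabels": {"app": "web"}},
        # The template every pod is created from.
        "template": {
            "metadata": {"labels": {"app": "web"}},
            "spec": {
                "containers": [
                    {
                        "name": "web",
                        "image": "nginx:1.25",
                        "ports": [{"containerPort": 80}],
                    }
                ]
            },
        },
    },
}

manifest = json.dumps(deployment, indent=2)
print(manifest)
```

Saved to a file such as `deployment.json`, this output could be submitted with `kubectl apply -f deployment.json`; manifests are more commonly written in YAML, but JSON is accepted too.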

Exposing a Deployment With Services

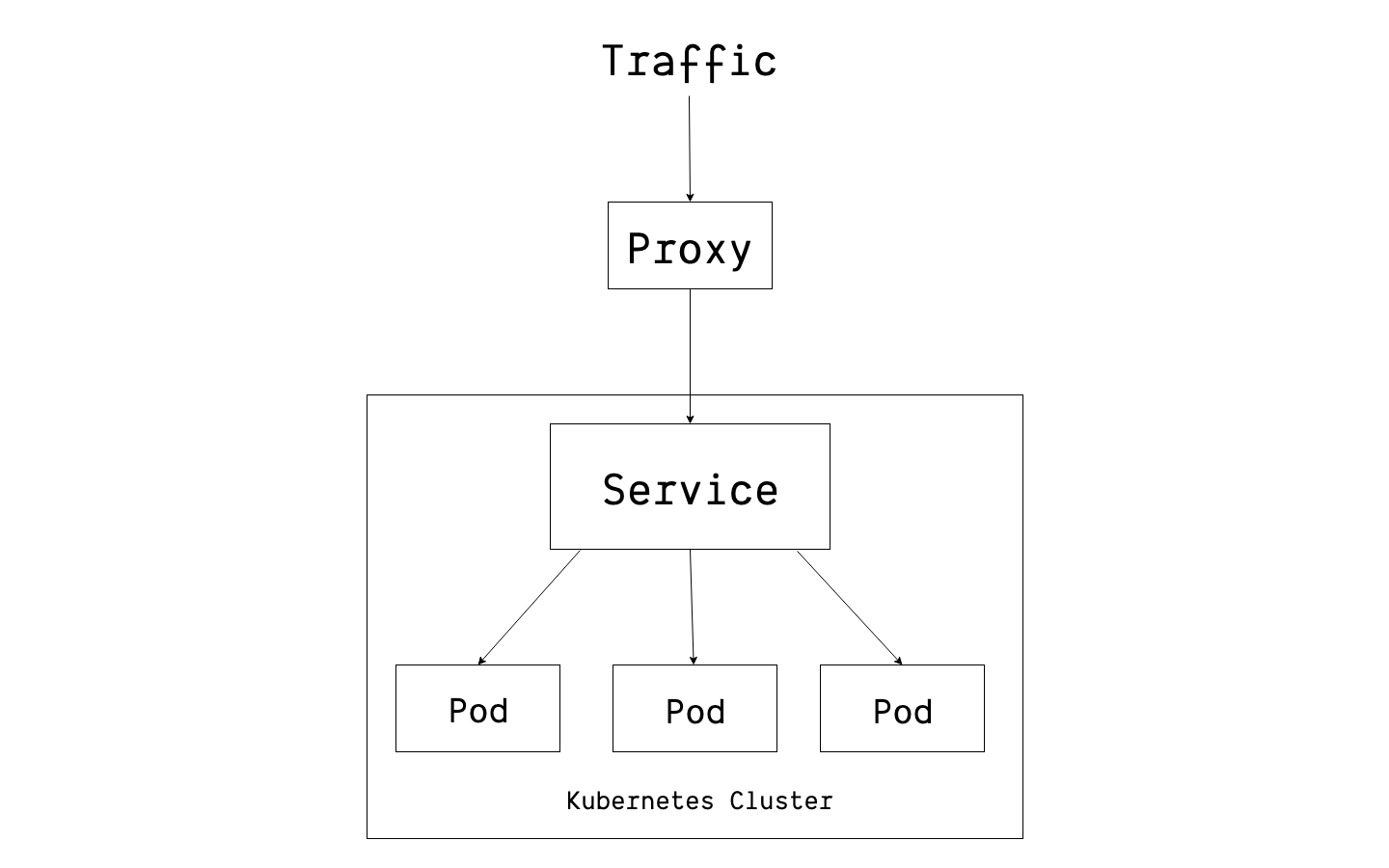

Kubernetes Service Pods

Once a deployment is running one or more pods, we have to think about how to access them.

When we deploy applications, they are often accessible from a browser or client through a Port. The Port allows us to access multiple services on the same machine without worrying about conflict; a service allows us to access a deployment spread across different machines without worrying about where it is running.

This is made possible by kube-proxy, which forwards our request to the machine where the pod is running and sends the response back through the same channel.
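A service finds the pods it should send traffic to by matching labels, regardless of which machine they run on. The sketch below shows a Service manifest as a dictionary together with a simplified version of that matching. The `matches` helper and the pod records are hypothetical and only simulate the selection; the manifest fields follow the `v1` Service API.

```python
# A Service manifest expressed as a Python dictionary.
# The name and labels are hypothetical examples.
service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "web"},
    "spec": {
        "selector": {"app": "web"},  # pods with this label receive traffic
        "ports": [{"port": 80, "targetPort": 80}],
    },
}


def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """A pod matches when it carries every label in the selector."""
    return all(labels.get(key) == value for key, value in selector.items())


# Simulated pods spread across two machines.
pods = [
    {"name": "web-1", "node": "node-a", "labels": {"app": "web"}},
    {"name": "web-2", "node": "node-b", "labels": {"app": "web"}},
    {"name": "db-1", "node": "node-a", "labels": {"app": "db"}},
]

selector = service["spec"]["selector"]
backends = [pod["name"] for pod in pods if matches(selector, pod["labels"])]
print(backends)
```

The two `web` pods are selected even though they sit on different nodes, which is what lets a client use the service without knowing where the deployment is running.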

Updated 5 months ago